Update 2016-11-22: Made the Makefile compatible with BSD sed (MacOS)

One advantage of static sites, such as those built by Hugo, is fast loading times. Because there is no processing to be done (no server-side rendering, no database lookups), a page loads as fast as you can serve the files that make it up. This makes bandwidth the primary bottleneck, and page speed is, incidentally, one of the factors Google uses to calculate your search ranking. See also PageSpeed Insights.

Compressing images

Because images are typically the largest parts of a page, it stands to reason that if we make the images smaller, the page loads faster. Luckily, there exist methods that compress images losslessly: the quality stays exactly the same, and the page simply loads faster. That seemed like a no-brainer to me, so I compressed all the images on the site using PNGout, as advised by Jeff Atwood. I mean, who doesn't like free bandwidth?

A newer algorithm called Zopfli (open-sourced by Google, and also mentioned by Jeff) claims even better results than PNGout, though. Results on this site's images confirm those claims. Running the tool on images already compressed by PNGout gives output such as this:

./zopflipng --prefix="zopfli_" static/images/2014/Dec/Screenshot-from-2014-12-29-13-28-29.png
Optimizing static/images/2014/Dec/Screenshot-from-2014-12-29-13-28-29.png
Input size: 89420 (87K)
Result size: 90361 (88K). Percentage of original: 101.052%
Preserving original PNG since it was smaller

./zopflipng --prefix="zopfli_" static/images/2014/Jun/Jenkins_install_git.png
Optimizing static/images/2014/Jun/Jenkins_install_git.png
Input size: 189406 (184K)
Result size: 166362 (162K). Percentage of original: 87.834%
Result is smaller

./zopflipng --prefix="zopfli_" static/images/2014/Jun/jenkins_batch.png
Optimizing static/images/2014/Jun/jenkins_batch.png
Input size: 21933 (21K)
Result size: 16255 (15K). Percentage of original: 74.112%
Result is smaller

./zopflipng --prefix="zopfli_" static/images/2014/Jun/jenkins_build_step.png
Optimizing static/images/2014/Jun/jenkins_build_step.png
Input size: 8184 (7K)
Result size: 6809 (6K). Percentage of original: 83.199%
Result is smaller

./zopflipng --prefix="zopfli_" static/images/2014/Jun/jenkins_config_git.png
Optimizing static/images/2014/Jun/jenkins_config_git.png
Input size: 57897 (56K)
Result size: 47164 (46K). Percentage of original: 81.462%
Result is smaller

The first result in the example output shows a case where Zopfli would actually have made the file bigger (because it was already compressed by PNGout, remember). That is nothing to worry about: zopflipng is smart enough to simply keep the original file in that case.
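The "Percentage of original" figure in the log is just the result size divided by the input size, times 100, and the smaller of the two files is the one that is kept. A minimal sketch of that arithmetic in shell, reproducing figures from the log above; the function names are hypothetical, and this mirrors the log output rather than zopflipng's own source code:

```shell
#!/bin/sh
# Hypothetical helpers that reproduce the arithmetic shown in the zopflipng log.
# They only mirror the log output; this is not zopflipng's implementation.

# percent_of_original INPUT_BYTES RESULT_BYTES: result size as a percentage of input size
percent_of_original() {
    awk -v in_bytes="$1" -v out_bytes="$2" \
        'BEGIN { printf "%.3f\n", 100 * out_bytes / in_bytes }'
}

# keep_decision INPUT_BYTES RESULT_BYTES: which file would be kept
keep_decision() {
    if [ "$2" -lt "$1" ]; then
        echo "Result is smaller"
    else
        echo "Preserving original PNG since it was smaller"
    fi
}

percent_of_original 89420 90361    # prints 101.052 (first image in the log)
keep_decision 89420 90361          # prints "Preserving original PNG since it was smaller"
percent_of_original 189406 166362  # prints 87.834 (Jenkins_install_git.png)
keep_decision 189406 166362        # prints "Result is smaller"
```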

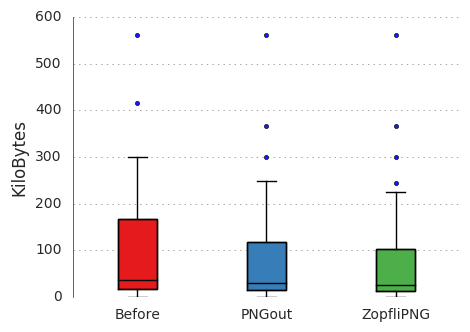

Comparing against both the original, uncompressed images and PNGout gave the following results:

| Compression | Mean relative size |
|---|---|
| Before | 1.00 |
| PNGout | 0.84 |
| ZopfliPNG | 0.77 |

Box plot of results on all images:

Source files: before.csv, pngout.csv, zopfli.csv

And this is with the default arguments. It is possible to squeeze out a few more bytes (zopflipng has the -m and --iterations options for this) if you're willing to wait longer.
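The mean relative sizes in the table above can be recomputed from the source CSVs. A minimal sketch with awk, assuming (hypothetically, since the CSV format isn't described here) that each file holds one "path,bytes" line per image with no header row, and that "mean relative size" means the average of the per-image ratios rather than the ratio of total bytes:

```shell
#!/bin/sh
# mean_relative_size BASELINE_CSV CANDIDATE_CSV  (hypothetical helper)
# Assumed CSV format: "path,bytes" per line, no header row.
# Prints the mean over matching images of candidate_bytes / baseline_bytes.
mean_relative_size() {
    awk -F, '
        NR == FNR { base[$1] = $2; next }   # first file: remember baseline sizes
        ($1 in base) && base[$1] > 0 {      # second file: one ratio per matching image
            sum += $2 / base[$1]
            n++
        }
        END { if (n > 0) printf "%.2f\n", sum / n }
    ' "$1" "$2"
}

# Usage, e.g.: mean_relative_size before.csv zopfli.csv
```

Running it once with pngout.csv and once with zopfli.csv as the second argument should give figures comparable to the table, provided the assumed format holds.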

Automate it with Make

Another joy of a simple static site is that you can compose regular tools, such as Make, to do useful things. We can use Make both to build the site and to compress any images that have not been compressed yet. You could of course do it manually for each new image you add, but be honest: you know you're going to forget at some point. So let's automate it instead!

This is the Makefile I use to build this site. Note that public depends on $(PNG_SENTINELS), so I literally can't forget to compress any new images I add:

.PHONY: help build server server-with-drafts clean zopfli

# One sentinel per PNG outside public/: dir/img.png -> dir/.img.png.zopfli
# The sed expression uses only POSIX basic regexps (no \+), so it works with both GNU and BSD sed
PNG_SENTINELS:= $(shell find . -path ./public -prune -o -name '*.png' -print | sed 's|\(.*/\)\([^/]*\.png\)|\1.\2.zopfli|')

help: ## Print this help text
	@grep -E '^[a-zA-Z_-]+:.*?## .*$$' $(MAKEFILE_LIST) | awk 'BEGIN {FS = ":.*?## "}; {printf "\033[36m%-30s\033[0m %s\n", $$1, $$2}'

server: ## Run hugo server
	hugo server

server-with-drafts: ## Run hugo server and include drafts
	hugo server -D

build: public ## Build site (will also compress images using zopfli)

zopfli: $(PNG_SENTINELS) ## Compress new images using zopfli

clean: ## Remove the built directory
	@rm -rf public

public: $(PNG_SENTINELS)
	@rm -rf public
	hugo

# Zopfli sentinel rule, assumes zopflipng binary is in the same folder
.%.png.zopfli: %.png
	./zopflipng --prefix="zopfli_" $<
	@mv $(dir $<)zopfli_$(notdir $<) $<
	@touch $@
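To make the sentinel scheme concrete, here is a minimal sketch of the same logic as plain shell, outside of Make. It is an illustration, not a replacement for the Makefile: it runs sequentially, and the names sentinel_for, compress_new_images and COMPRESS (a stand-in for whatever command compresses a PNG in place) are hypothetical. It also relies on `[ a -nt b ]`, which is not strictly POSIX but is supported by bash and dash.

```shell
#!/bin/sh
# Plain-shell sketch of the Makefile's sentinel scheme (sequential, no make).
# COMPRESS is a hypothetical variable naming a command that compresses the
# given PNG in place; it is left unquoted on purpose so it may carry arguments.

# sentinel_for PATH: dir/img.png -> dir/.img.png.zopfli (the Makefile's mapping)
sentinel_for() {
    printf '%s\n' "$1" | sed 's|\(.*/\)\([^/]*\.png\)|\1.\2.zopfli|'
}

# compress_new_images ROOT: for every PNG under ROOT, skipping ROOT/public,
# run $COMPRESS when the sentinel is missing or older than the image,
# then touch the sentinel so the next run skips that image.
compress_new_images() {
    find "$1" -path "$1/public" -prune -o -name '*.png' -print |
    while IFS= read -r png; do
        sentinel=$(sentinel_for "$png")
        if [ ! -e "$sentinel" ] || [ "$png" -nt "$sentinel" ]; then
            $COMPRESS "$png" && touch "$sentinel"
        fi
    done
}
```

With a hypothetical wrapper script compress-in-place.sh that runs zopflipng and moves the result over the original (as the Makefile recipe does), this could be called as `COMPRESS=./compress-in-place.sh compress_new_images .`. Make does the same timestamp comparison, but also gives you parallel jobs for free.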

For best performance, run make with parallel jobs (change 4 to your number of CPUs): make -j4 zopfli.

To keep track of which files have already been compressed without running Zopfli on them again (which takes a while), a sentinel file is created next to each image, following the pattern .<imgfilename>.zopfli. The next time around, zopfli is only invoked for files that have not already been compressed, making it a one-time operation per image. And when everything has already been compressed, you'll just get this:

make: Nothing to be done for 'zopfli'.

Other posts in the Migrating from Ghost to Hugo series:

- 2016-10-21 — Reduce the size of images even further by reducing number of colors with Gimp

- 2016-08-26 — Compress all the images!

- 2016-07-25 — Migrating from Ghost to Hugo